Even More Computational Thinking

Unfortunately we can't escape the 🧇.

Miscellaneous Definitions:

- Computable problem = a problem where every instance of it can be solved by an algorithm in a finite number of steps.

- Theoretical approach = some magical method quicker than brute force, which the textbook conveniently neglects to explain.

- Intractable problems = problems for which there are no efficient algorithms to solve them (e.g. the Travelling Salesman Problem). They may initially be solved by brute-force until it becomes impossible to find the optimal solution in a reasonable amount of time.

- Time complexity = the time taken for an algorithm to run relative to the input size (measured using Big O Notation).

Thinking logically:

- The Structured Programming Approach uses only three basic programming structures (sequence, selection and iteration) and aims to improve clarity and maintainability of programs. Each block should have a single entry and exit point, hence breaking out of loops is not recommended for structured programming

- Block-structured Programming Languages (e.g. Python, Pascal) allow use of just these three control structures.

- Most logic errors in programs occur at decision points and in decision conditions. A trace table can be used to test the logic of algorithms.

- Pseudocode (a mix between programming syntax and human language) can be used to design algorithms. It corresponds closely to the iteration structures of actual programming languages.

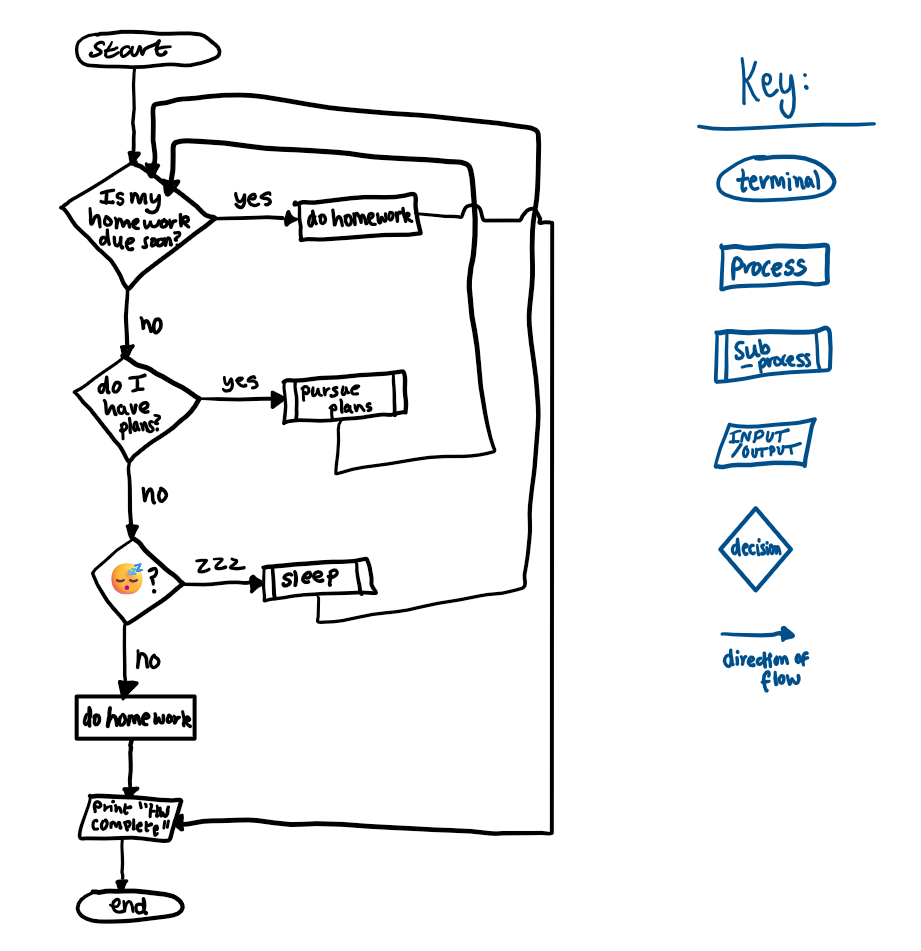

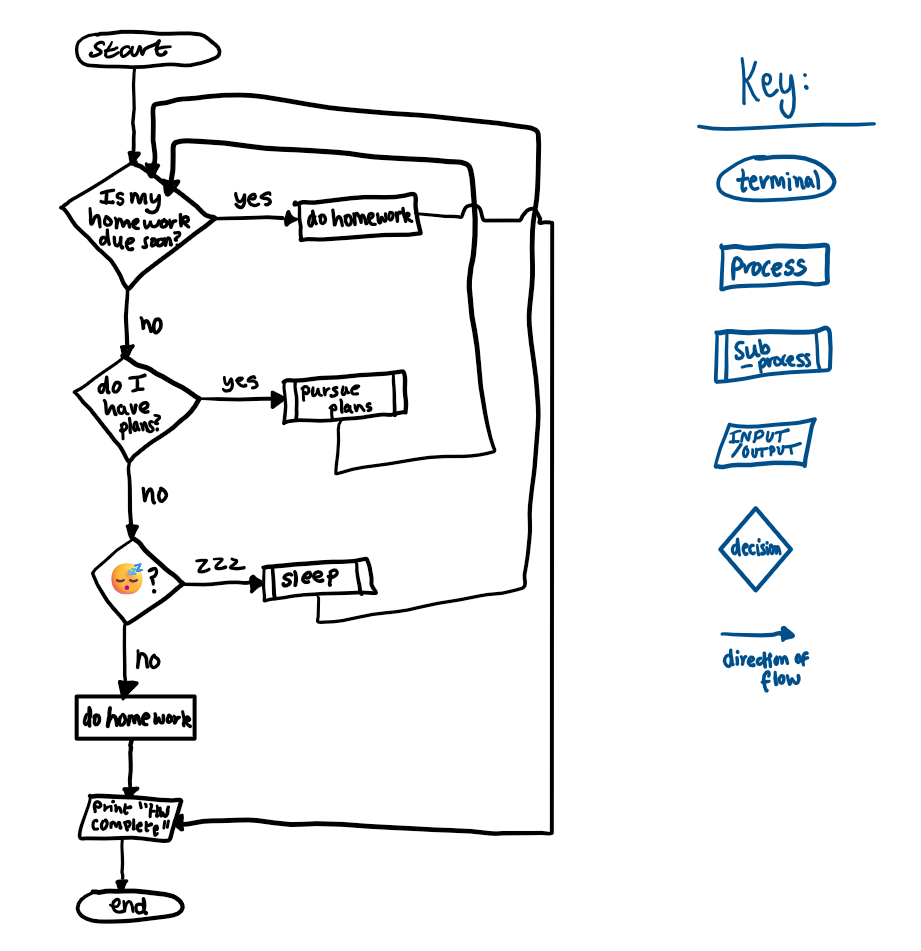

- Flow charts are another way of designing algorithms. Flowcharts are useful to visualise a sequence of different procedures, decisions and outcomes.

Figure 1: Flowchart symbols key and example

Thinking concurrently:

Concurrent processing is when several processes are running with each process being given a slice of processor time in turn. It appears that several tasks are being performed simultaneously, even though only a single processor is being used.

✅ Increases program throughput (number of tasks completed in a given time increases).

✅ When the processor is waiting for user input another task can be performed instead of wasting time.

❌ If a large number of users are trying to run multiple programs involving a lot of computation, it takes longer for the tasks to be completed.

Parallel computing requires multiple processors that each execute different instructions simultaneously. It aims to speed up computations and cannot be done on a single processor.

✅ Repetitive calculations that are carried out on a large amount of data can be performed simultaneously on different processors, improving processing speed.

✅ Can be used for graphics processing (e.g. rendering 3D objects by simultaneously working on different components of the graphic).

❌ There is an overhead in coordinating the processors.

❌ Some tasks run faster with a single processor than multiple processors.

Problem solving:

🤔 Typical methods and strategies:

- Enumeration involves trying all possible solutions until the correct one is found. This is also known as an exhaustive search and it becomes inefficient as the size of the problem increases.

- Simulation involves designing and/or building a model of a real system, in order to understand its behaviour and evaluate different strategies for its operation. Simulation may be used for problems such as financial risk analysis, population predictions and queueing problems. Abstraction is used when simulating a system.

- Divide and Conquer involves reducing the size of a problem at every iteration. A well-known example is a binary search.

- Problem Abstraction involves removing details until a problem is reduced to one that has already been solved. For example, a chess problem can be abstracted by creating a graph representing all possible moves from each square and then “unfolding” the graph.

- Automation involves building and then using models to solve problems. Automation typically replaces repeatable processes and minimises manual intervention.

- Clear visualisation is important to make a problem easier to understand and solve.

- Backtracking is a methodical method where different sequences are tried out until you find the one that leads to a solution. Backtracking is useful when you don’t have enough information to know initially what the best sequence is. An example is solving a maze, which involves returning back to a previous point at dead ends.

⛏️ Data mining:

Data mining involves digging through large data sets to discover hidden connections and predict future trends that may occur in the data. Big data refers to large data sets that cannot easily be handled using traditional databases and methods. Data mining is often performed using software such as analytics tools.

⌛ Heuristics:

Heuristic methods use algorithms which are not guaranteed to be optimal, but are sufficient to find an adequate solution. These methods often sacrifice accuracy or precision for finding a solution within a quicker time frame.

📈 Performance modelling:

Performance modelling involves simulating different user and system loads on a computer using a mathematical approximation. This is often cheaper and easier than actual performance testing, and the results can be used to plan a system suitable to the specific requirements.

➡️ Pipelining:

Pipelining involves splitting tasks into smaller parts and overlapping the processing of these task parts. For example, while one instruction is being fetched, another is being decoded and another executed. Pipelining is commonly used in microprocessors in PCs.